Adaptive Softmax

A TF2.0+ implementation of the Adaptive Softmax layer.

For a full description of the project, please see my TowardsDataScience post: https://towardsdatascience.com/how-to-overcome-the-large-vocabulary-bottleneck-using-an-adaptive-softmax-layer-e965a534493d

For the code, please follow the link to my GitHub: https://github.com/Jmkernes/PAR-Transformer-XL/blob/main/adaptive_softmax.py

The goal of this project is to create a TensorFlow 2.0+ implementation of the adaptive softmax, as outlined in the paper “Efficient softmax approximation for GPUs”.

For large vocabularies, the final dense layer, whose cost grows with the product of the hidden dimension and the vocabulary size, can become prohibitively expensive, which makes alternative output layers a necessity. Speedups of anywhere from 2x to 10x in both training and inference are achievable.
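To make the cost argument concrete, here is a rough per-token multiply-add count for a full output layer versus an adaptive layout with a head cluster and \(J\) projected tail clusters (the two stages described below). The vocabulary split, projection widths, and tail frequencies are all hypothetical, and the count assumes training, where each tail is evaluated only for tokens whose label falls in it.

```python
# Back-of-the-envelope multiply-add counts per token (training, label known).
# All sizes and frequencies below are hypothetical, chosen for illustration.


def full_softmax_cost(d: int, vocab_size: int) -> int:
    """Multiply-adds for a dense d -> vocab_size output layer."""
    return d * vocab_size


def adaptive_softmax_cost(d, head_size, tail_sizes, tail_dims, tail_freqs):
    """Expected multiply-adds per token for a head + J tail clusters layout.

    head_size  : number of words in the head cluster
    tail_sizes : number of words in each tail cluster
    tail_dims  : reduced width each tail cluster projects the hidden state to
    tail_freqs : fraction of tokens whose label falls in each tail cluster
    """
    num_tails = len(tail_sizes)
    # Head softmax: every token pays for the head words plus J cluster slots.
    cost = d * (head_size + num_tails)
    for size, dim, freq in zip(tail_sizes, tail_dims, tail_freqs):
        # A tail is only evaluated for the fraction of tokens that need it:
        # project d -> dim, then apply a dense dim -> size layer.
        cost += freq * (d * dim + dim * size)
    return cost


d = 512
head, tails = 20_000, [80_000, 150_000]
dims = [256, 64]        # projection widths shrink for rarer clusters (d/2, d/8)
freqs = [0.15, 0.05]    # tail clusters hold the rare words

full = full_softmax_cost(d, head + sum(tails))
adaptive = adaptive_softmax_cost(d, head, tails, dims, freqs)
print(f"full: {full:,}  adaptive: {adaptive:,.0f}  ratio: {full / adaptive:.1f}x")
```

Multiply-add counts are only a proxy: measured speedups also depend on the hardware and on implementation overheads, such as routing rows to each tail cluster, so they can be noticeably smaller than this ratio.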

The layer consists of two stages:

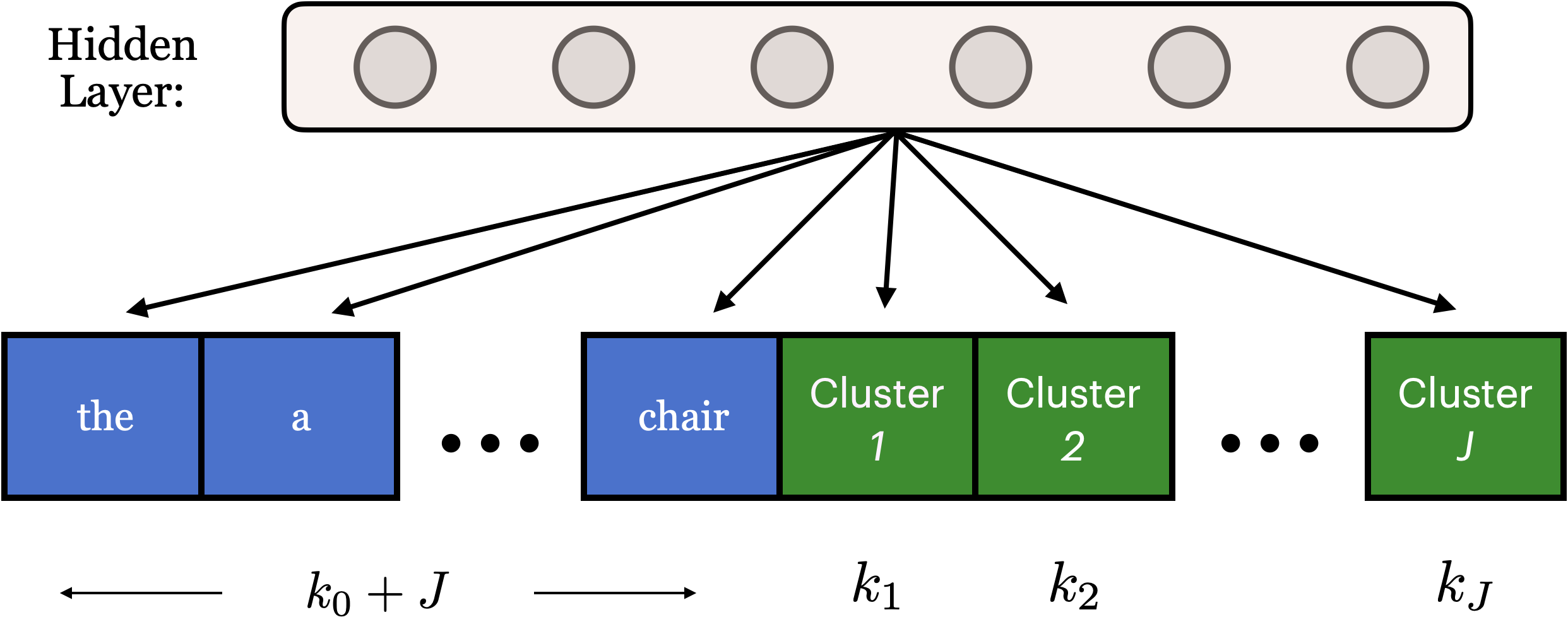

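- A segmentation of the vocabulary into a head cluster, holding the most frequent words, and \(J\) tail clusters holding the rarer ones. We perform the usual softmax over the head cluster, with \(J\) additional slots for the probabilities that a word resides in each of the tail clusters. A word \(w\) in tail cluster \(j\) then receives probability \(p(w \mid h) = p(j \mid h)\, p(w \mid j, h)\), where \(h\) is the final hidden state.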

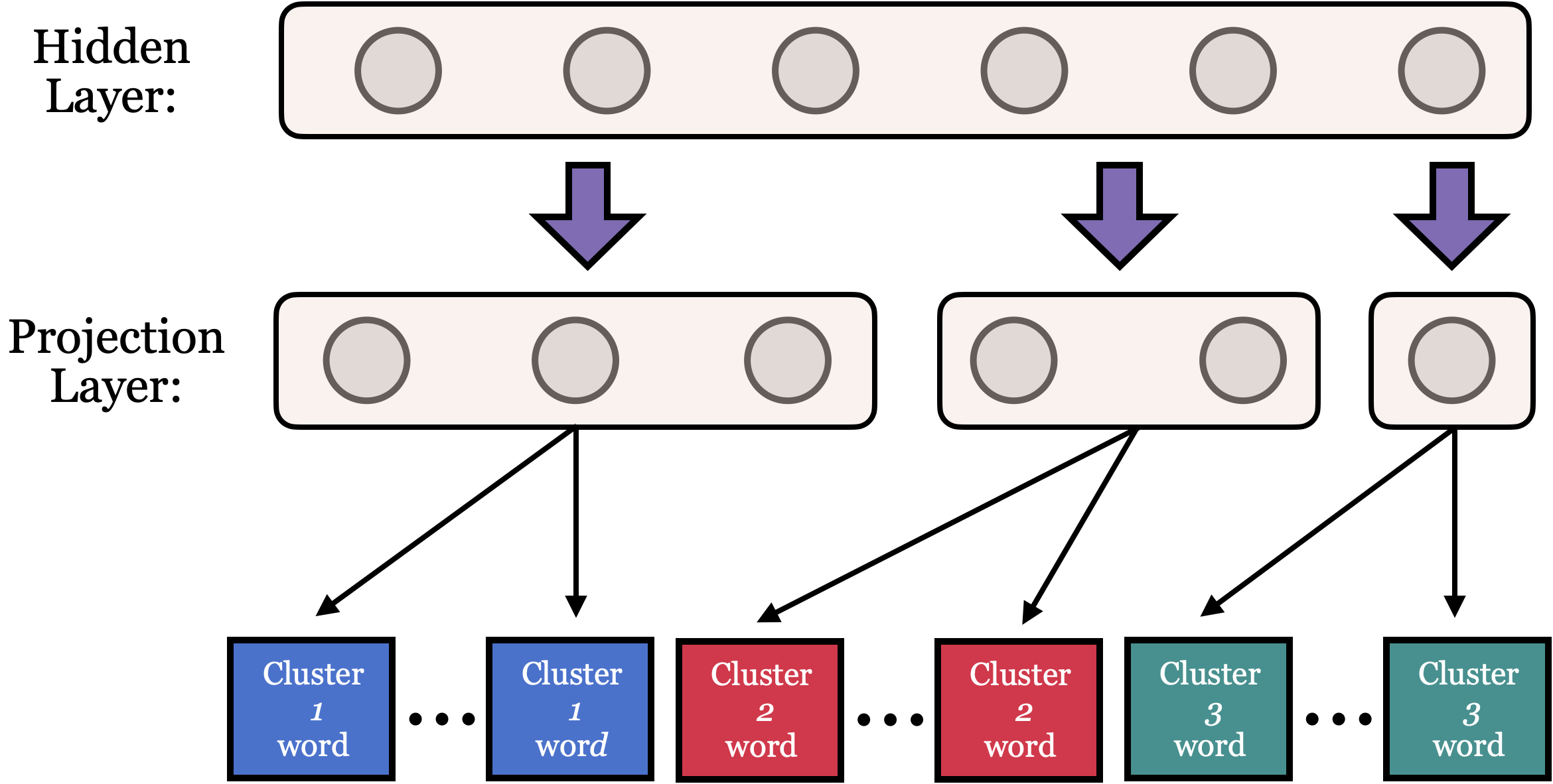

- A dimensionality reduction of the final hidden state via linear projections, applied to the inputs of the tail cluster softmaxes. The softmax over a tail cluster is only computed for labels that fall in that cluster. When we do have to compute these softmaxes, they are a) much less frequent by definition, and b) much less computationally intensive thanks to the dimensionality reduction.
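Putting the two stages together, here is a minimal plain-Python sketch of the forward computation for a single hidden vector. It is not the linked TensorFlow code: the class name `AdaptiveSoftmaxSketch`, the `cutoffs` convention (cumulative cluster boundaries ending at the vocabulary size), the random weights, and the tiny sizes are all assumptions for illustration. It shows the head softmax with one slot per tail cluster, and a tail softmax that runs only when the target word lives in that tail, on a projected copy of the hidden state.

```python
import bisect
import math
import random


def matvec(weights, x):
    """Dense layer without bias: weights is a list of rows (out_dim x in_dim)."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class AdaptiveSoftmaxSketch:
    """Hypothetical two-stage layout for a single hidden vector.

    cutoffs = [c0, c1, ..., cJ]: ids in [0, c0) form the head cluster and
    ids in [c_j, c_{j+1}) form tail cluster j; cJ is the vocabulary size.
    tail_dims[j] is the reduced width that tail cluster j projects h down to.
    """

    def __init__(self, d, cutoffs, tail_dims, seed=0):
        rng = random.Random(seed)
        self.cutoffs = cutoffs
        num_tails = len(cutoffs) - 1
        # Head: one logit per head word plus one slot per tail cluster.
        self.head = rand_matrix(cutoffs[0] + num_tails, d, rng)
        self.proj, self.tail_out = [], []
        for j in range(num_tails):
            size = cutoffs[j + 1] - cutoffs[j]
            self.proj.append(rand_matrix(tail_dims[j], d, rng))         # d -> d_j
            self.tail_out.append(rand_matrix(size, tail_dims[j], rng))  # d_j -> |V_j|

    def log_prob(self, h, word_id):
        """log p(word_id | h); a tail softmax runs only if word_id is in that tail."""
        if not 0 <= word_id < self.cutoffs[-1]:
            raise ValueError(f"word_id {word_id} is outside the vocabulary")
        head_lp = log_softmax(matvec(self.head, h))
        c0 = self.cutoffs[0]
        if word_id < c0:
            return head_lp[word_id]  # frequent word: the head softmax is enough
        j = bisect.bisect_right(self.cutoffs, word_id) - 1  # tail cluster index
        z = matvec(self.proj[j], h)  # project h down to tail_dims[j]
        tail_lp = log_softmax(matvec(self.tail_out[j], z))
        # log p(w | h) = log p(cluster j | h) + log p(w | cluster j, h)
        return head_lp[c0 + j] + tail_lp[word_id - self.cutoffs[j]]


# Usage: the factorized probabilities still sum to one over the vocabulary.
layer = AdaptiveSoftmaxSketch(d=8, cutoffs=[4, 10, 20], tail_dims=[4, 2])
h = [0.1 * i for i in range(8)]  # stand-in for a final hidden state
total = sum(math.exp(layer.log_prob(h, w)) for w in range(20))
print(f"sum of p(w | h) over the vocabulary: {total:.6f}")
```

A batched version would compute the head once for all rows and run each tail only on the rows whose labels fall in it; checking that the factorized probabilities sum to one, as in the usage lines, is a cheap sanity test for any such implementation.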

A schematic of the projection procedure is shown below:

Again, for the full project description and code, please see my TDS article and GitHub repository, respectively.